Fixing a fifteen-year-old curve fit bug

The backwards compatibility of Perl software is wonderful. That’s why it’s all the more jarring when you find a package that doesn’t work. This is the story of a 15-year-old bug that I managed to track down and, fortunately, resolve.

Y’know, I hate it when software doesn’t “just work”; both as a user and as a developer. Usually, something like this distracts me and I want to work out what the problem is before I can continue with whatever I was doing beforehand. Sometimes, diving down the rabbit hole doesn’t lead anywhere and I have to find a workaround and move on. Other times I get lucky and I work out what the bug was and can fix it. This is one of those lucky times.

There’s something magical about diving into solving a bug. I’m able to find real focus, time flies and I come out the other end tired yet invigorated. I really enjoy these kinds of bug-hunting puzzles and I seem to be fairly good at it.1 But maybe I’m just overly stubborn (in a good way!).

I like to understand why something doesn’t work and then see if I can find a solution. Also, if I find a solution, I like to understand why a solution works. After all, sometimes while poking around, I stumble on something that suddenly works. Yet I find in such cases that it’s always good to understand why it works. That way I can be more sure that the solution really does solve the underlying problem.

As I mentioned above, one of the great things about Perl is that often code from a decade or so ago “just works”. The Perl people put a lot of effort into ensuring backwards compatibility. That’s why it’s so surprising when something doesn’t work, as with the situation I recently found myself in.

The backstory

So how did I get into this situation? Well, I wanted to run some old code of mine to process Arctic sea-ice extent data and see what the results look like now. To do that, I needed to install the project’s dependencies. Unfortunately, running

$ cpanm --installdeps .

failed. Specifically, the distribution2

Algorithm::CurveFit (which

I use to fit a curve to the sea-ice extent data) failed its tests and hence

wouldn’t install. Weird. That used to work without a problem. Hrm.

I checked the dist’s info on meta::cpan, but it

hadn’t been updated since 2010. In other words, there hadn’t been any recent

changes to cause the failure. Since I’d written my program in 2014 and

hadn’t changed it since 2018, I’d expected everything to still run as

normal. Also weird.

I browsed through the code on meta::cpan and found that it was plain Perl:

it didn’t use any XS or anything which looked special. In other words, this

should still “just work”.3 More weird.

Then I found out that this has been a known problem since 2017 and that there was a ticket for the issue on RT. The gist of the ticket was that the dist had stopped working on Debian stretch, whereas it used to work on Debian jessie.

Hrm, interesting. I wonder why?

I was starting to get pulled into the bug’s curiosity vortex. I thought, “I’ll have a quick look at this, maybe I can spot something”. Famous last words. Down the rabbit hole I plunged…

Rebuilding the past

So where to start? My first instinct was to try and recreate the past. After all, the dist used to pass its test suite on Debian jessie with Perl 5.18 (the last Perl version I’d used). Thus, if I could see it working in the old environment, maybe I could spot where things had changed. This would give a working reference system and a baseline so I could see what “correct” looked like.

To recreate this past state, I spun up a

Vagrant virtual machine with Debian jessie.

Here’s the configuration I used in my Vagrantfile:

config.vm.define "bare-test-jessie" do |bare_test_jessie|

bare_test_jessie.vm.box = "debian/jessie64"

bare_test_jessie.vm.network "private_network", ip: "192.168.23.107"

bare_test_jessie.vm.network "forwarded_port", guest: 22, host: 2207, id: "ssh"

end

Why did I use 107 at the end of the private network address and 2207 for the

forwarded port? Well, this is simply the seventh entry in my Vagrantfile.

There’s nothing special about the number other than to keep my Vagrant VMs

separate. Note that it’s not necessary to specify the forwarded port; I do

so to avoid any port conflicts when running multiple VMs.

Spinning up the new VM was a simple matter of:

$ vagrant up bare-test-jessie

Upon startup, I got this error:

The following SSH command responded with a non-zero exit status.

Vagrant assumes that this means the command failed!

apt-get -yqq update

apt-get -yqq install rsync

Stdout from the command:

WARNING: The following packages cannot be authenticated!

rsync

Stderr from the command:

W: Failed to fetch http://security.debian.org/dists/jessie/updates/main/source/Sources 404 Not Found [IP: 151.101.194.132 80]

W: Failed to fetch http://security.debian.org/dists/jessie/updates/main/binary-amd64/Packages 404 Not Found [IP: 151.101.194.132 80]

W: Failed to fetch http://httpredir.debian.org/debian/dists/jessie/main/source/Sources 404 Not Found [IP: 199.232.190.132 80]

W: Failed to fetch http://httpredir.debian.org/debian/dists/jessie/main/binary-amd64/Packages 404 Not Found [IP: 199.232.190.132 80]

E: Some index files failed to download. They have been ignored, or old ones used instead.

E: There are problems and -y was used without --force-yes

This is often the first stumbling block when working with an old system: the

APT repository is no longer available from the original address as listed in

the VM. I.e. in this case, there’s no entry for jessie at the URLs

http://httpredir.debian.org/debian or http://security.debian.org/.

There’s also the related problem that APT repositories no longer use plain

HTTP–they have to use HTTPS these days–but that’s not the underlying issue

here.

Fortunately, the APT repository is still available as part of the Debian

distribution archives. This means

I only needed to update the URLs in /etc/apt/sources.list to point to the

archive.4

Although the error looked bad (with a wall of red text) the VM was up;

rsync had failed to install, nothing more. Other than that, everything

was running. Thus, I could ssh into the VM and set things up further:

$ vagrant ssh bare-test-jessie

Opening up vi5 on /etc/apt/sources.list

$ sudo vi /etc/apt/sources.list

I changed the content to look like this:

deb http://archive.debian.org/debian/ jessie main non-free contrib

deb-src http://archive.debian.org/debian/ jessie main non-free contrib

deb http://archive.debian.org/debian-security/ jessie/updates main non-free contrib

deb-src http://archive.debian.org/debian-security/ jessie/updates main non-free contrib

Now it was possible to update the package list and install some software:

$ sudo apt update

$ sudo apt -y upgrade

$ sudo apt -y --force-yes install vim curl build-essential

Note that the --force-yes option was necessary because some of the

packages can’t be authenticated due to their age. This is another one of

those things one has to deal with when working with legacy systems.

I installed the vim package because, although vim was installed, it only

ran in vi compatibility mode. I needed the vim command because that’s

what my muscle memory expects. Old habits die hard.

I also needed curl so that I could do the curl <url> | bash dance to

install perlbrew. The build-essential package

was, erm, essential so that I could build Perl from source.

Installing perlbrew and setting it up looked like this:

$ curl -L https://install.perlbrew.pl | bash

$ source ~/perl5/perlbrew/etc/bashrc

$ echo 'source ~/perl5/perlbrew/etc/bashrc' >> ~/.bashrc

Installing Perl looked like this:

$ perlbrew --notest install perl-5.18.4

$ perlbrew switch 5.18.4

$ perlbrew install-cpanm

I chose to install Perl 5.18.4 because that was the most recent version I’d

used in my program way back in 2018. The reasoning being that if

Algorithm::CurveFit had worked with that version back then, it should work

with that version in a Debian jessie VM now.

Unfortunately, the cpan/Time-HiRes/t/nanosleep.t test fails (the only one

to fail in the entire Perl test suite); hence it’s necessary to use the

--notest option for the installation to complete successfully.

After switching to the newly-installed Perl version, and installing cpanm

in that environment, I was ready to try installing Algorithm::CurveFit to

see if it works in my rebuild of the past:

$ cpanm Algorithm::CurveFit

Which worked! I.e. it built, tested and installed as expected. This was my

baseline test: now I had a system configuration in which

Algorithm::CurveFit worked (meaning, its test suite passed).

Now I needed to be able to dig around in the code and change things. To do that I needed to get the source tarball and unpack it:

$ wget https://cpan.metacpan.org/authors/id/S/SM/SMUELLER/Algorithm-CurveFit-1.05.tar.gz

$ tar -xvzf Algorithm-CurveFit-1.05.tar.gz

Changing into the directory created by tar, building the Makefile, and

running the test suite from the source tarball, I was able to make sure that

this code also worked as I expected:

$ cd Algorithm-CurveFit

$ perl Makefile.PL

Checking if your kit is complete...

Looks good

Generating a Unix-style Makefile

Writing Makefile for Algorithm::CurveFit

Writing MYMETA.yml and MYMETA.json

$ make test

cp lib/Algorithm/CurveFit.pm blib/lib/Algorithm/CurveFit.pm

PERL_DL_NONLAZY=1 "/home/vagrant/perl5/perlbrew/perls/perl-5.18.4/bin/perl" "-MExtUtils::Command::MM" "-MTest::Harness" "-e" "undef *Test::Harness::Switches; test_harness(0, 'blib/lib', 'blib/arch')" t/*.t

t/00pod.t ........ skipped: Test::Pod 1.00 required for testing POD

t/00podcover.t ... skipped: Test::Pod::Coverage 1.00 required for testing POD coverage

t/01basic.t ...... ok

t/02bad_deriv.t .. ok

All tests successful.

Files=4, Tests=20, 2 wallclock secs ( 0.04 usr 0.03 sys + 1.30 cusr 0.09 csys = 1.46 CPU)

Result: PASS

It wasn’t necessary to install the dependencies in this step, because the

cpanm Algorithm::CurveFit call from earlier had already installed them.

To make absolutely sure that the test which fails “in the future” still

worked on Debian jessie (and to ensure that I was using the local library,

and not the one I’d installed globally via cpanm

earlier),6 I ran the test via perl directly:

$ perl -Ilib t/02bad_deriv.t

1..13

ok 1

ok 2

ok 3

ok 4

ok 5

ok 6

ok 7

ok 8

ok 9

ok 10

ok 11

ok 12

ok 13

This was all the detail I could get at this point. I.e. the test suite could only tell me that the tests had passed.

Even so, now with a solid, working baseline reference system I was able to move forward and try to work out why the code didn’t work in the present day.

A quick peek before digging in

So, with a working reference system, what to do now? Simply staring at the code and mulling over what it does wasn’t a good strategy at this stage because I’d not yet interacted with the code and seen how information flows through the system. Still, having a quick skim read of the code was a good idea, just to get a feel for the code’s structure and generally to get a bird’s eye view before looking for places to dig deeper.

Looking through the code, the dist consists of a single Perl module with about 250 lines of code excluding docs and comments. The module calculates a best fit of a given function to an input set of data based upon an initial guess of the function’s coefficients. The code seemed quite intricate, yet it was well commented and 250 lines of code seemed manageable to understand.

Poking the system to gain a feel for the software

Ok, so what does the test failure look like on “later-than-jessie” systems?

$ perl -Ilib t/02bad_deriv.t

1..13

ok 1

ok 2

not ok 3

# Failed test at t/02bad_deriv.t line 50.

ok 4

ok 5

ok 6

ok 7

ok 8

ok 9

ok 10

ok 11

ok 12

ok 13

# Looks like you failed 1 test of 13.

Unfortunately, that’s not very informative. Looking in the RT

ticket,

SREZIC mentions that it’s possible to

get a bit more information about why the tests in 02bad_deriv.t fail by

using cmp_ok. Taking his advice, I appended these tests:

cmp_ok($v + $eps[$par], ">", $val[$par]);

cmp_ok($v - $eps[$par], "<", $val[$par]);

and ran the test file again (perl -Ilib t/02_bad_deriv.t), getting this

output:

not ok 5

# Failed test at t/02bad_deriv.t line 52.

# '5'

# >

# '5.15'

As my Master’s supervisor used to ask me quite a lot: “Ya good, but what does it mean?”. Good question. Dunno. Yet.

Looking at the test code more closely, we have this snippet:

my @val = (5.15, 0.01, 0.100, 1.045);

my @eps = (1.00, 0.10, 1.000, 0.100);

foreach my $par (0..$#parameters) {

my $v = $parameters[$par][1];

ok(defined $v);

ok($v + $eps[$par] > $val[$par]);

ok($v - $eps[$par] < $val[$par]);

cmp_ok($v + $eps[$par], ">", $val[$par]);

cmp_ok($v - $eps[$par], "<", $val[$par]);

}

which includes the newly added cmp_ok calls.

This loops over a set of parameters and compares their calculated values (in

the @parameters array defined earlier in the test) with their expected

values (in the @val array) to within a given tolerance for each (specified

in the @eps array). What the test failure output is telling us is that we

expect the value for the first element in the @val array to be greater

than 5.15, but its actual value plus its tolerance (the first element of the

@eps array) is 5. Since the first value of the @eps array is 1.00, the

calculated value must be 4. Checking the initial guess of what the

parameters should be (from earlier in the test)

my @parameters = (

[ 's', 4.0, 0.01 ],

[ 'c', 0.01, 0.001 ],

[ 'y0', 0.1, 0.001 ],

[ 'x0', 1.0535, 0.01 ],

);

we see that the s parameter is initially set to 4.0, which suggests that

this is the parameter not achieving its expected value. Was I 100% sure?

Well, no. I like to be very sure that I’ve understood everything properly

before moving onwards, so it’s best to have a look at the parameters.

Fortunately, the author had left some debugging code in the test:

#use Data::Dumper;

#print Dumper \@parameters;

Reactivating this (i.e. uncommenting it) and running the tests again, I got:

$ perl -Ilib t/02bad_deriv.t

1..13

$VAR1 = [

[

's',

4,

'0.01'

],

[

'c',

'0.0471889540880574',

'0.001'

],

[

'y0',

'0.1',

'0.001'

],

[

'x0',

'1.0535',

'0.01'

]

];

which shows the parameter values after having calculated the fit.

So there ya go: the first parameter is called s, its final value is 4

(as I calculated above) and its accuracy is

0.01.7

Staring at the test code I realised that the @parameters array must be

updated in-place. It didn’t make any sense to set the @parameters to an

initial guess and then expect (as part of the test assertions) that its

values had changed after fitting the curve. That was a good thing to know

and a piece of the puzzle to set aside for later. I might not need it, but

ya never know.

So what was this output telling me? The main point was that s wasn’t

changing at all: it had the value of 4 both before and after running the

curve fitting algorithm.8 That’s weird because in the Debian

jessie code, we know that the test passes, which means that its final value

should be close to 5.15. Why doesn’t this value change anymore?

A numerical stability problem?

I’d like to say that I used a very focused and analytical approach to how I

continued, but I can’t: I turned to plain old print debugging.

Somehow I feel ashamed to admit that I’d simply used print. The thing is,

it’s easy to use, it’s flexible, and it allows me to see how the values I’m

interested in evolve at a single glance. What can I say?

Data::Dumper is your friend!

Staring at the code again, I realised that it iteratively updates the

parameters of the function to fit within a main while

loop.

This seemed to be the best place to look for anything funny going on. Thus,

I started sprinkling print Dumper $<variable_name>; statements in various

places, while comparing the output from the jessie system and from my

bullseye system (where the tests were failing).

I got lucky: one thing stuck out. The @cols variable used as input to

Math::MatrixReal->new_from_cols(),

which creates a

matrix of datapoints X parameters

had a very large number turn up in its output. Like, HUGE. Where other

numbers in the array were sitting between roughly -2.0 and 2.0, one number

was 9.22229939811846e+20.9 Having done a fair bit of

numerical computational work as part of my PhD and later while working on

simulation systems and scientific computing, I smelled a numerical precision

issue floating10 around somewhere. I noticed that part of the code

used

derivatives,

hence I guessed two numbers on a denominator somewhere were getting too

close to one another, causing the value in the @cols array to explode.

Ok, so if we’re calculating derivatives, what are we calculating derivatives of? The test tries to fit the following formula to the input data:

formula => 'sqrt( s*(x-x0)^2 + c) + y0'

Formatting this a bit more nicely, we have:

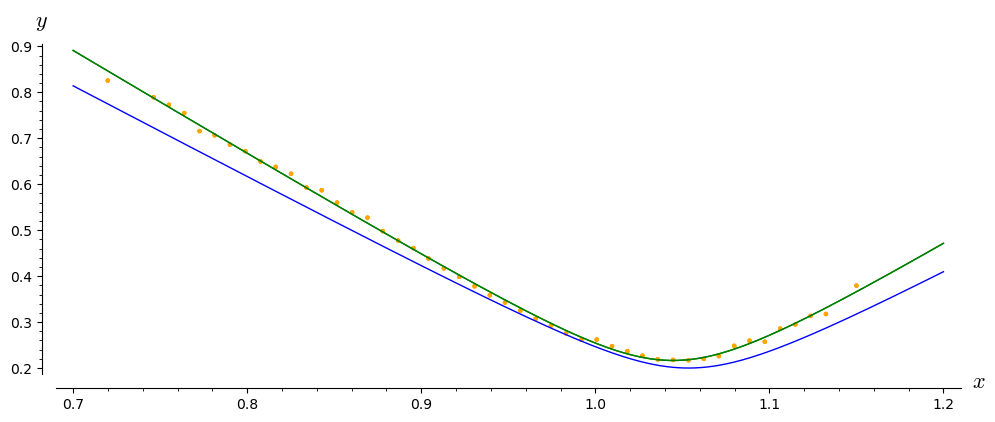

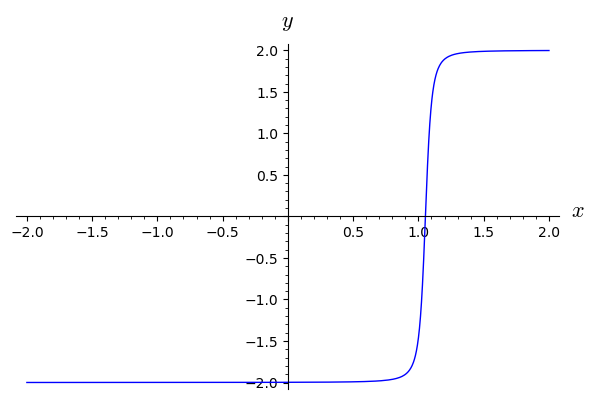

\[f(x) = \sqrt{ s(x-x_0)^2 + c} + y_0\]What does this function look like? Seeing a function’s form when plotted can give a feel for how it behaves. I defined the function in the Sage mathematics software system and plotted it

# initial guess parameters

s = 4

y0 = 0.1

x0 = 1.0535

c = 0.01

# the fit function

f = sqrt(s*(x-x0)^2 + c) + y0

# plot the function and save it to file

p = plot(f, (x,-2, 2), axes_labels=['$x$', '$y$'])

p.save('algorithm-curvefit-bug-fit-function.png')

which produced this image:

Hrm, this function is “interesting” just past \(x = 1\). As the test filename suggests, this could be a function with a “bad derivative”.

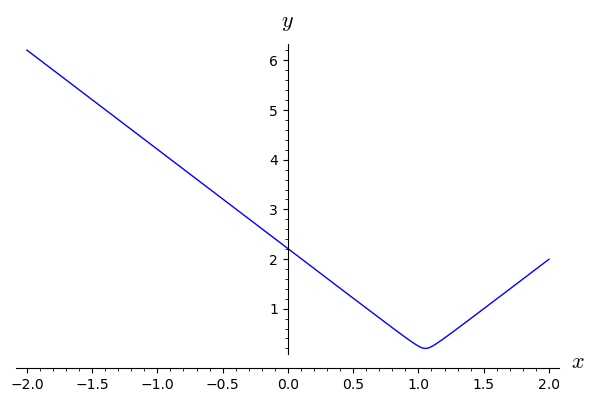

Of course, now the question is: what’s the derivative? Again, using Sage, we can work this out:

# calculate the derivative of the fit function

f.derivative(x)

=> s*(x - x0)/sqrt(s*(x - x0)^2 + c)

which, written more nicely, looks like this

\[f'(x) = \frac{s (x - x_0)}{\sqrt{s (x - x_0)^2 + c}}\]Plotting this function over the same range, we have

Yup, this definitely has a funky derivative, especially just above \(x = 1\). Now I was more sure that this is why the test file has the name it does. This indicates that around this value, we might be getting numerical instabilities. I decided to look closer.

Letting \(x = x_0\) in the derivative \(f'(x)\), we get

\[f'(x_0) = \frac{s (x_0 - x_0)}{\sqrt{s (x_0 - x_0)^2 + c}} = 0\]In other words, this is where the derivative crosses the \(y\) axis. The only numerically interesting thing is happening in the denominator, which is

\[\sqrt{s (x - x_0)^2 + c}\]but setting \(x = x_0\) here only gives

\[\sqrt{c}\]which is nonzero (and not particularly small), so I didn’t think this would be causing any nasty numerical problems. Sure, the derivative changes a lot around \(x = x_0\), but it doesn’t seem like anything which could be causing a numerical instability.

Not being satisfied with the numerical instability explanation, I decided to

look at the code more closely. There is a chunk of code close to the

beginning of the main while

loop

which finds the value of the derivative for each \(x\) point of the function

to fit and eventually adds these values to the @cols array. This chunk of

code is split into two blocks: the first calculates the derivative if the

$deriv variable is a CODE reference, and the second otherwise. Both

blocks have a section where the code falls back to a numerical method if the

derivative isn’t a defined value.

When running the test t/02bad_deriv.t, only the second block is executed.

I found that there’s one case where the fallback is triggered: when the

\(x\) value from the input data is equal to \(x_0\) (i.e. where the

derivative changes the most). To calculate a value when the derivative is

undefined, the code uses a closure called

$formula_sub

which (as far as I could tell) returns the value of the function to fit at

this \(x\) value,11 thus providing a substitute for the

undefined derivative value.

When the $function_sub closure calculates the function to fit at x0, we

can simplify the function \(f(x)\) to

which is non-zero and seems well-behaved. Again, this doesn’t look like a numerical issue.

I then investigated the values that $function_sub returns as well as its

input parameter values. I found that the enormous value appears exactly

when x is equal to x0. Given the input parameter values and the known

value for x, I calculated the value $function_sub should return. Using

the above expression for \(f(x_0)\) and the values of c and y0, I got:

This shouldn’t be hard for a computer to calculate. Hence I think I can stomp on the numerical instability hypothesis and will have to find a different cause for the anomalous large numbers.

Structure in data and code points the way

Wild goose chases aren’t exactly uncommon when debugging code. But then, serendipity also plays a role. So it was in this case for me.

While “print debugging” the parameter values passed into $function_sub,

I noticed a difference in structure between separate calls. Sometimes the

structure looked like this:

$VAR1 = 'x';

$VAR2 = '1.0535';

$VAR3 = 's';

$VAR4 = [

's',

4,

'0.01'

];

$VAR5 = 'c';

$VAR6 = [

'c',

'0.0467904042605691',

'0.001'

];

$VAR7 = 'y0';

$VAR8 = [

'y0',

'0.1',

'0.001'

];

$VAR9 = 'x0';

$VAR10 = [

'x0',

'1.0535',

'0.01'

];

and sometimes like this:

$VAR1 = 'x';

$VAR2 = '0.7199';

$VAR3 = 's';

$VAR4 = 4;

$VAR5 = 'c';

$VAR6 = '0.0467904042605691';

$VAR7 = 'y0';

$VAR8 = '0.1';

$VAR9 = 'x0';

$VAR10 = '1.0535';

Looking back, this should have made some warning bells go off. Perhaps I was tired. All I thought at the time was, “hrm, that’s odd”. However, the thought was not lost. It got stored somewhere in the back of my mind, ready to make pennies drop later.

Digging through the rest of the code, I happened to notice that

$formula_sub was called in other locations, this time not involved in

derivative calculations. In this other situation, it assisted in the

calculation of the

residuals.

Here’s the code for comparison, once embedded in the derivative calculation:

$value = $formula_sub->($xv, @parameters)

and again in the residuals calculation:

my @beta =

map {

$ydata[$_] - $formula_sub->(

$xdata[$_],

map { $_->[1] } @parameters

)

} 0 .. $#xdata;

The second instance isn’t that clear, so removing some of the surrounding code and reformatting it to be on one line, we get

$formula_sub->($xdata[$_], map { $_->[1] } @parameters)

This passes the current value of x and the function’s parameter values as

a list of arguments into $formula_sub. The parameter values are stored in

the second element of the sub-array within each element of the @parameters

array, hence the $_->[1} bit.

I noticed that this had the same rough form as the derivative calculation code

$value = $formula_sub->($xv, @parameters)

Hang on! What was that map doing in there?

And then something clicked. The difference in how $formula_sub was called

was the reason for the difference in parameter structure within

$formula_sub that I’d noticed earlier.

I then looked again at the parameter values appearing in $formula_sub.

Instead of a value being associated with each parameter, there was an

array containing the parameter’s metadata being associated with each

parameter. Not only is this doubled up, it’s fairly obviously wrong.

With this new perspective, I realised that this was the underlying problem:

the parameters weren’t being passed to $function_sub correctly when

calculating the fallback derivative. What was happening was that the

@parameters array was being concatenated with the scalar value of the

current x value. That’s not correct: $formula_sub expects only a list

of argument values, not an array-of-arrays concatenated onto a scalar.

What I guessed was probably happening (and now I’m getting into uncharted

territory) is that a memory reference value was being passed to

$formula_sub for one of the parameter values instead of the actual

parameter value. A memory reference value could be any weird value and

it wouldn’t surprise me if this was the source of the huge number I was

seeing.

Striving for a deeper explanation

Wait a minute. If this is the cause of the approximately 1e+20 number, and the code is the same now as it was on the jessie system, that means this issue should also exist on the jessie system. Hrm.

Looking at the $function_sub value at x == x0 on my rebuilt jessie

system, there it was: a huge number. This time it was

216930334118.067,9 which is of the order of 1e+11.

This means the issue has been lurking since (at least) 2010.

So what’s going on here? Why does the code work on the older system but not on the newer one?

My attempt at an explanation is this: on the jessie system, 1e+11 wasn’t large enough to stop the fitting algorithm from converging to a sensible solution. However, when this number is as large as 1e+20, it dominates so much (as one would expect: it’s 9 orders of magnitude larger than that on the jessie system) that the fitted solution never deviates from the initial guess. I also found that increasing the maximum number of iterations doesn’t help convergence to the correct value, because the solution “converges” after only 3-4 iterations.

Of course, now the question becomes: why is the outlier function value on jessie approximately 1e+11 but on later systems it has jumped to approximately 1e+20?

My best guess is that it might be due to a change of integer precision in something, somewhere between jessie and stretch. For instance, consider that the maximum integer values for 32-bit and 64-bit are:

MAX_INT 32 bit: 2,147,483,647 => 2e+09

MAX_INT 64 bit: 9,223,372,036,854,775,807 => 9e+18

Note that both of these numbers are two orders of magnitude smaller than the

large numbers I was seeing on jessie and later systems, respectively. Could

we just be seeing MAX_INT*100 being converted into a floating point value?

I honestly don’t know, and this is where the trail becomes hard to follow.

There are now more questions than I think I can definitively answer:

- does this point to a libc change? The main C library

libcchanged from 2.19 to 2.24 between jessie and stretch. - is this due to the GCC version change? This went from 4.9.x in jessie to 6.x in stretch.

- in the change from GCC 4 to GCC 5 (which was on the way to GCC 6 in

stretch), the default mode for C was changed from

-std=gnu89to-std=gnu11, which is a pretty large jump! Maybe this is a cause?

In each case, I couldn’t find any evidence for something like an integer precision change. Of course, that doesn’t mean it doesn’t exist; it merely means I didn’t find anything. At the end of the day, I couldn’t find a reason and can only speculate that where once a 32-bit integer was used, now a 64-bit integer is the default, hence causing the large change between the outlier values I observed.

What is interesting, I find, is that the outlier numbers are very similar to

the respective MAX_INT value on each system. By this, I mean that on my

jessie test system, the huge outlier number (as a string of numbers) looked

like approximately MAX_INT32*100 each time I ran it. The same occurred on

stretch and later systems: the outlier consistently looked like

MAX_INT64*100. It’s a hint that I might be on the right track with my

guess about an integer precision change, but it’s only that: a hint.

Avoiding further speculation on the exact cause of this change, I decided to put it into the “too hard basket”, accept it, and move on.

Bug fix

What’s great about the analysis I made earlier is that I’ve also stumbled

upon the correct way to pass parameters into $function_sub and thus the

fix is rather simple. Changing the calls to $function_sub where the

derivatives are calculated from

$value = $formula_sub->($xv, @parameters)

to

$value = $formula_sub->($xv, map { $_->[1] } @parameters)

resolves the problem, and the test suite now passes. Yay! ![]()

Also, $formula_sub now returns the value 0.2 when x == x0 as we

calculated at the end of the “A numerical stability problem?”

section. Also nice.

That’s it. That’s the bug fix. Quite an anti-climax in the end…

First contact

Since the dist’s author hadn’t been active on this project since roughly 2010, I’d guessed that he’d moved on and the email address probably wasn’t active anymore. I mean, this is understandable: it’s open-source software and maintainers have lives and end up doing other things. On the off chance that emails do still get through, I sent him a message … and he replied! I was totally stoked!

After having a thorough look around, unfortunately he was unable to find any

old source code repositories on his computers at home. The original source

code repository used to be http://svn.ali.as/cpan/trunk/Algorithm-CurveFit/,

which no longer exists. Thus we thought that the only code available now

would be the tarball on CPAN. Consequently, he created a GitHub repo for

Algorithm::CurveFit making

commits corresponding to each version number. This was the best solution to

create a repo for what looked like lost information.

Later, he found out that the original repository was buried within the historical archive of Adam Kennedy’s Open Repository, which is hosted on GitHub. Thus, we’ve been able to find the original code with commits. This is brilliant as then I can do a bit of repository archaeology and try to work out what the author was thinking when he implemented various things.

As yet, he’s not had time to extract the commits from the

Algorithm-CurveFit subdirectory of the TheOpenRepository repository into

the new

p5-Algorithm-CurveFit

repo. Doing so would be nice as it would recreate the state of the original

repository. Interestingly enough, I blogged about this process a few years

ago. The short answer

about how to do this is to use git subtree

split.

Also, he’s very kindly given me COMAINT on the project. Many thanks to SMUELLER for taking the time to help me out!

As soon as the bug

fix is reviewed, I’ll

roll out a bug-fix release. Then I can get my old project back up and

running again. ![]()

Open question: the five-point stencil

Although I’m pretty sure I’ve stomped on the bug that sent me on this journey, I now have more questions than when I started. I’d hoped the original repo’s commit messages would answer some, but it looks like the information has been lost to the winds of time.

One thing in particular that interests me is the five-point stencil calculation mentioned in the code. Was this ever implemented? From the code, it looks like it was started but never finished. The module was released as if it had been implemented, because this concept is mentioned in the CHANGELOG. Asking the author about this he too thinks that, from looking at the code, it wasn’t implemented. Of course, details are scarce because it’s a been long time since he wrote the module.

The author mentioned a five-point stencil fix for Algorithm::CurveFit in

a bug report in the Math::Symbolic

dist, so it seems that

the implementation was intended. Given that the $h and $t variables are

assigned but never used around where one would expect a five-point stencil

calculation, it could be that this was implemented locally but somehow never

made it into the release that mentions it.

It seems that the function value returned from $function_sub when

calculating the derivative should actually be turned into a derivative via

the five-point stencil method. I guess my worry is that I’ve solved one bug

only to have uncovered another, potentially deeper issue. In other words,

in certain corner cases, the module calculates incorrect values. That would

be bad.

It’s also entirely possible that this was merely a simple oversight and now

I’ve got the opportunity to fix it. That would be nice

![]()

W(h)ither software?

While working on this I had all kinds of philosophical thoughts. For instance: how to maintain such projects? Should we just let them wither on the vine and die? Should we fork them? At the end of the day, who takes care of our ageing software?

I don’t have answers to these questions, but I think that software that people are using should at least be able to be maintained and kept up to date. Fortunately, open-source software allows anyone to help out, meaning that it’s at least possible to keep small projects like the one discussed here alive and kicking.

Wrapping up

So that’s it! Another bug found, another bug squashed. Thanks for coming along for the ride!

-

I’m available for freelance Python/Perl backend development and maintenance work. Contact me at paul@peateasea.de and let’s discuss how I can help solve your business’ hairiest problems. ↩

-

Often, the words “module”, “distribution” and “dist” (short for “distribution”) are used interchangeably. Yet, a “distribution” is the term for one or modules bundled together. Often, a distribution contains a single module, hence why the terms get used interchangeably. ↩

-

I’m beginning to sound like a cracked record, now aren’t I? ↩

-

I originally spotted this tip in the Debian forums. ↩

-

Although the

vicommand exists and actually runsvim, this is onlyvim-tiny, which runsviminvicompatibility mode. Thus thevimcommand doesn’t exist and to be able to use it one has to install thevimpackage after first fixing thesources.listand doing theupdate/upgradedance. ↩ -

I did mention that I’m stubborn, didn’t I?

↩

↩ -

The meaning of these elements I gleaned from the module’s POD. ↩

-

I realised much later that almost none of the parameters had changed from their initial guesses: only

chad been updated slightly. ↩ -

The exact number changes with each run of the test suite. ↩ ↩2

-

Pun not intended. ↩

-

Note that this is rather than the value of the derivative. This is odd because in the context where this is called, the code expects a derivative. ↩

Support

If you liked this post and want to see more like this, please buy me a coffee!